X-treme Interfacing!!

Wheelchair users who cannot move at all are unable to use conventional HCI. Voice interfaces have been used in these situations (e.g. [Simpson,98]), so that users can simply tell the robot where they want to go. Like joysticks, these devices are commercially available and, once adjusted to a given user, fairly reliable, particularly now that mobile phones include voice recognition in their operating systems (1). From a qualitative point of view they are not so different from touch screens: in both cases, the system just receives a destination.
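To make that point concrete, here is a minimal Python sketch, assuming a navigator that accepts a goal as map coordinates: a voice front-end and a touch-screen front-end both reduce to the same `Destination`. The place names, coordinates and functions (`KNOWN_PLACES`, `from_voice`, `from_touch`) are hypothetical, and a real system would take the transcript from a speech recogniser rather than a plain string.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical named locations known to the wheelchair's navigator (map coordinates, metres).
KNOWN_PLACES = {
    "kitchen": (4.0, 1.5),
    "bedroom": (9.5, 6.0),
    "bathroom": (7.0, 2.5),
}


@dataclass(frozen=True)
class Destination:
    """The only thing the navigation layer needs: where to go."""
    x: float
    y: float


def from_voice(transcript: str) -> Optional[Destination]:
    """Map a recognised utterance such as 'go to the kitchen' to a goal."""
    # Lower-case and strip simple punctuation so "Kitchen," still matches.
    words = [w.strip(".,!?") for w in transcript.lower().split()]
    for name, (x, y) in KNOWN_PLACES.items():
        if name in words:
            return Destination(x, y)
    return None  # utterance not understood; the interface should ask again


def from_touch(x: float, y: float) -> Destination:
    """A touch on a map display already yields coordinates directly."""
    return Destination(x, y)
```

For instance, `from_voice("Go to the kitchen, please")` and `from_touch(4.0, 1.5)` produce equal `Destination` objects, which is why, downstream of the front-end, the two interfaces behave the same.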

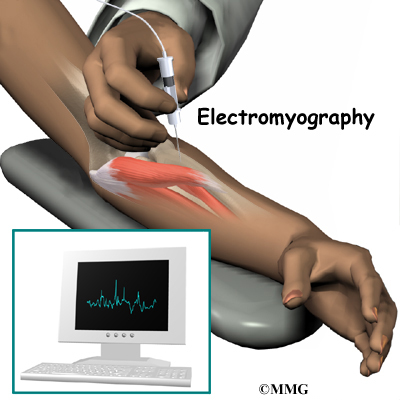

Some people, though, have severe speech impairments and, consequently, cannot use voice control as such. In these cases voice can be complemented or replaced by other physical interfaces, such as head, foot, chin or shoulder switches, which are fairly common in the field of assistive technologies, yet quite expensive. These interfaces are not as comfortable as the ones mentioned above but, in some cases, they may be the user's only way to exert some control over mobility. While it might be tempting to pick one of them intuitively for a particular case, it is extremely important to take ergonomic and medical factors into account. For example, some head trackers might not be advisable for people with spinal cord injury, as they require significant neck motion.

In more critical situations, even simpler, specifically designed interfaces can be used. After studying the needs of a patient stricken by ALS, the Telethesis project decided to use an on/off switch to choose an option on a screen that is continuously refreshed. If movement is completely out of the question, more invasive interfaces are still an option. Eye tracking, for example, estimates where the person is looking in order to move in that direction. Some eye tracking mechanisms capture video of the person's face to locate the cornea, under either natural light or structured illumination [Li,07]. Other systems, like EagleEyes, rely on electrodes to measure the EOG (electrooculogram), a signal that varies with the angle of the eyes in the head [Yanko,98]. Electromyographic sensors, in turn, use probes to capture muscular activity [Mulroy,04].
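The on/off-switch approach is usually called single-switch scanning: the screen highlights one option after another, and a single press selects whatever is highlighted at that moment. The post does not describe Telethesis's actual options or timing, so the following is only a generic Python sketch; the option names, the `dwell` interval and the `max_cycles` timeout are assumptions chosen for illustration.

```python
from typing import Optional, Sequence


def scan_select(options: Sequence[str], press_times: Sequence[float],
                dwell: float = 1.5, max_cycles: int = 3) -> Optional[str]:
    """Simulate single-switch scanning (hypothetical parameters).

    The highlight starts on options[0] at t = 0 and advances to the next
    option every `dwell` seconds, wrapping around. The first switch press
    selects the option highlighted at that instant. If no press arrives
    within `max_cycles` full passes, nothing is selected.
    """
    if not options:
        return None
    timeout = dwell * len(options) * max_cycles
    for t in sorted(press_times):
        if 0.0 <= t < timeout:
            # Which highlight slot the press fell into, modulo the list length.
            index = int(t // dwell) % len(options)
            return options[index]
    return None
```

With `options = ["forward", "left", "right", "stop"]`, a press at 3.2 s falls in the third slot and selects `"right"`, while a press at 6.1 s has wrapped around to `"forward"`. The trade-off is the usual one for scanning interfaces: a longer `dwell` is easier for the user to hit but makes every selection slower.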


(1) Leading companies in the voice recognition field include Microsoft Corporation (Microsoft Voice Command), Nuance Communications (Nuance Voice Control), Vito Technology (VITO Voice2Go), Speereo Software (Speereo Voice Translator) or MyCaption for BlackBerry, to name just a few.
